Deborah Lupton (University of Canberra) and Mike Michael (University of Sydney)

Many sociological questions are raised by big data. How, when and where are people exposed to, or begin to engage with, big data? Who are regarded as trustworthy sources of big data, and as credible commentators on and critics of it? How do big data reflect, mediate and respond to folk knowledges about, for instance, the use and misuse of information? What are the mechanisms by which big data systems and their development are opened to public scrutiny, or which allow for the impact of the voices of publics? Members of the public and regulatory agencies alike are attempting to make sense of the interactions between small and big data and the rapidly growing ways in which these data are purposed and repurposed. They do so in a constantly shifting context in which some people’s life chances and opportunities are enhanced, while those of others are limited, by the digital data that are generated about them.

In a pilot project conducted in Australia in late 2014, we set out to experiment with some approaches to eliciting people’s understandings of big data. We conducted six focus group sessions with Sydney residents, each with 6-8 members of diverse socioeconomic backgrounds and ages. We wanted to go beyond the usual approach of simply asking questions of people. We decided instead to experiment with employing cultural probes to stimulate thought, discussion and debate. Cultural probes have been frequently used in design but rarely in sociological research. Because they provoke new ways of thinking about the social world, we think they can help people to better engage with the impact of digital devices, the gathering of personal data that they facilitate and the politics of big data.

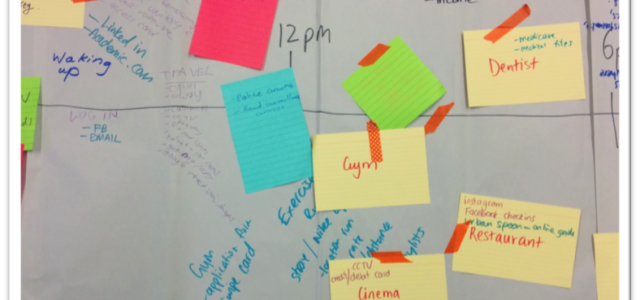

We decided to ask focus group participants to engage in collaborative activities or tasks, and then to discuss their experiences of these tasks within the group. These tasks included working together to draw a timeline of a typical person’s day and adding the ways in which data (digital or otherwise) may be collected on that person (the Daily Big Data Task); a task in which a demographic profile of a particular individual was provided and participants were asked how these details may be generated using digital technologies (the Digital Profile Card Game); and asking paired participants to design data-gathering devices: one with which they would usefully collect any kind of data about themselves, and one for collecting data on another person (the Personal Data Machine). Following each task we asked the group to reflect on it and discuss its implications for individuals’ experiences of being data subjects. Our research data comprised the material artifacts that were generated from these probe tasks as well as the tapes of the group discussions during and following the completion of each task (see image used as header for this article for an example of the Daily Big Data Task artifacts).

When reviewing the tape transcriptions, it was clear that many of the participants had some awareness of the diverse ways in which surveillance technologies, mobile devices, search engines and social media sites collect information about people’s activities. In Group 3, for example, as the group members were completing the Daily Big Data Task, they talked about CCTV cameras in public spaces, public transport smart cards, smart phones (that could be hacked), digital tracking of postal deliveries by Australia Post, digital payment systems, smart mattresses and wearable devices for tracking sleep patterns and other biometrics, Wi-Fi providers having access to geolocation and internet browsing practices, Facebook tracking users’ likes and preferences, Google tracking browsing and searches, credit card use, gyms tracking use of their facilities by members and satellites tracking people’s geolocation.

In the participants’ accounts it was evident that the context of the use of personal data was vital to judging how ‘scary’ the implications of digital data surveillance may be. In one of the discussions following the Daily Big Data Task, a male participant had this to say: ‘It’s how the information is controlled and accessed. To me that’s the biggest problem about this whole Big Brother thing is not the collection of information, because that’s just going to happen. There’s no way we can stop that. In a lot of ways that information is very useful. It might be – to law enforcement authorities or whoever – but as long as only the right people are allowed access to that. Therefore there have to be strict controls on controlling the information and allowing access to it. I think it’s too loose at the moment.’

For a female participant, the knowledge that ‘some people out there know as much about you as you know about yourself’ was ‘scary’. She observed that ‘there is a lot going on that we don’t know’ in terms of how other actors are accessing people’s personal data. Another male participant noted that it ‘depends on who’s got the data’. Providing the example of a person with severe depression, he commented that if others knew this information, then they might be able to provide emotional support or useful services. On the other hand, there are actors or agencies, such as potential employers, that might use this information to discriminate against a person with depression.

When the participants were asked to design their ‘Personal Data Machine’ and explain it to the group, a willingness was apparent to use digital devices for ever-more intrusive forms of surveillance of oneself or others, forms that may allow others to gain greater insights into the participants’ lives. For example, one pair designed a dream-recording app that would allow them to remember their dreams the next day. They went on to describe how this could be linked to a dating app, so that prospective couples could share each other’s dreams and perhaps work out how compatible they were. Another pair discussed a data machine that could monitor the social interactions of people’s partners, so that the user could determine if too great a level of attention was being paid by their (possibly cheating) partners to other people. A third Personal Data Machine, invented by another pair, engaged with the Internet of Things, interacting with all the machines in one’s kitchen and eliciting information about food consumed and ready to eat, dishes washed and so on. The associated app would know exactly what food users were preparing and eating and would then provide dietary and nutrition advice. This device was one of several that monitored biometrics or food intake and then provided advice for how people should live to achieve good health, weight loss or physical fitness, taking on a disciplinary or advisory role.

It has often been asserted in media and communication studies that people’s concepts of privacy have changed in response to the sharing of personal information that now takes place on social media sites. Yet we may be seeing a transformation in attitudes in response to the controversies and scandals in relation to big data that have received a high level of public attention over the past two years, potentially reshaping concepts of privacy again. Whistle-blower Edward Snowden’s revelations about national security agencies’ digital surveillance of their citizens, the Facebook and OKCupid experiments, and the hacking of nude celebrity photos on iCloud, for example, have publicised the ways in which people’s personal data may be used, often without their knowledge or consent.

None of our participants mentioned these scandals and controversies directly. But what is emerging from these focus groups is evidence of a somewhat diffuse but quite extensive understanding on the part of the participants of the ways in which data may be gathered about them and the uses to which these data may be put. It was apparent that although many participants were aware of these issues, they were rather uncertain about the specific details of how their personal data became part of big data sets and for what purposes this information was used. While the term ‘scary’ was employed by several people when describing the extent of data collection in which they are implicated and the knowledge that other people may have about them from their online interactions and transactions, they struggled to articulate more specifically what the implications of such collection were for their own lives.

We found that the participants tended to veer between, on the one hand, recognising the value of both personal data and the big aggregated data sets that their own data might be part of, particularly for their own convenience, and, on the other hand, expressing concern or suspicion about how these data may be used by others. As the man we quoted above commented, ‘in a lot of ways that information is very useful’. He acknowledged that he may have little control over, or even knowledge about, what information is collected on him. But for this participant, and for some others, the ways in which this information is repurposed are also important. Yet, as evidenced by some of the devices and discussions in relation to the Personal Data Machine, the lure and promise of generating reams of personal information about the self or others in one’s life appears in some ways to obviate the knowledge that the participants articulated about ‘Big Brother’-type or purely commercial surveillance.

It is easy to be seduced by the promise of more detailed and regular information about oneself, or of being able to exert control over others by collecting data on them. The major question is where the line should be drawn: when does digital surveillance become too intrusive, scary or creepy? This is the issue with which our participants are grappling and which has significant implications for government attempts to both use and regulate the cargo cult of big data.

Deborah Lupton is Centenary Research Professor in the News & Media Research Centre, Faculty of Arts & Design, University of Canberra. Her latest books are Medicine as Culture, 3rd edition (Sage, 2012), Fat (Routledge, 2013), Risk, 2nd edition (Routledge, 2013), The Social Worlds of the Unborn (Palgrave Macmillan, 2013), The Unborn Human (editor, Open Humanities Press, 2013) and Digital Sociology (Routledge, 2015). A new book on the sociology of self-tracking is forthcoming with Polity. Her current research interests all involve aspects of digital sociology: big data cultures, self-tracking practices, the use of digital technologies in pregnancy and parenting, the digital surveillance of children, and digital health technologies. Mike Michael is Professor of Sociology and Social Policy in the School of Social and Political Sciences, University of Sydney. His interests include the relation of everyday life to technoscience, biotechnological and biomedical innovation and culture, and speculative methodology. He has published widely on such topics as mundane technology and social (dis)ordering, animals and society, publics and technoscience, and design and sociological method.