Jeffrey Alan Johnson (Utah Valley University)

There is in the world of big data an assumption that data is reality, an objective representation of the world it purports to represent. If the data says that Peter’s address is “31 Spooner Street,” that means that there is a residence at that address, and Peter lives in that residence. If it lists Peter’s city and state as “Quahog, Connecticut” rather than “Quahog, Rhode Island,” then there is an error in the data that can be corrected so that the data accurately represents reality. There is no choice about the matter; the data is or is not correct.

But what if data isn’t an objective reflection of reality? My recent work on data systems, based on an examination of the student information systems at Utah Valley University (UVU) in the United States, has explored the many ways in which data reflects not objective concepts but rather constructive practices, making the content of a data system one among many possible interpretations of reality, no one of which is inherently correct. When we use data, we are as much reading a book as we are counting real characteristics. And when we create data, we are not just gathering information. We are participating in a political process.

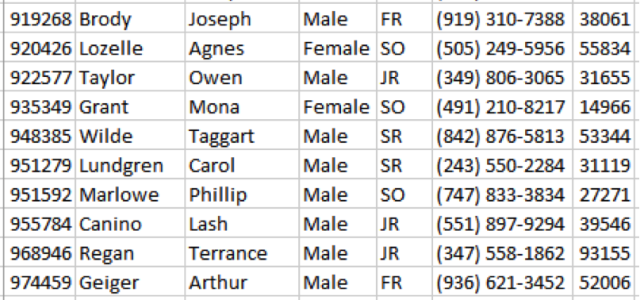

Gender is a good example for seeing data construction at work. The data systems at UVU store only two values for a person’s gender, “Male” or “Female.” Those are not the only possibilities, of course. We could store individualized gender performativities by allowing the custom gender descriptions that Facebook adopted in 2015, offer an expanded list of options, or simply allow a null value for the data field. In fact, UVU did allow a value of “Unspecified” until it was deprecated in 2012.

There are no objective criteria for choosing among these ways of storing data. Even if one accepts the gender binary as the way of nature, one cannot make an objective decision about whether to include the “Unspecified” value. There may be good reasons for choosing one way or the other, but we cannot say that there is an objectively right decision. Hence, reality underdetermines the data; there are always multiple data states that can represent the same reality. If we want the data to give us a single common view of reality, we have to have a process that filters the many possibilities to produce that single definitive data state.

I call that process the translation regime of a data system. As I have previously described it, the translation regime is “the set of implicit or explicit principles, norms, rules, and decision-making procedures through which single, commensurable data states are selected to represent states of the world.” A data system can’t come into existence without social processes that decide what the rows of a data table will represent, what information about the rows will be stored in which columns and how, and how different tables can be joined to each other. These come from a range of sources. Some are technical, such as business rule and validation tables that define the values that are allowed in a field. But the content of those rules isn’t technical at all. They come from formal data definitions, government regulations, and social norms. The technical standards implement the social standards in a data system, embedding, enforcing, and constraining the social domain’s worldview while hiding the inherent sociality of data behind the veil of the technical.
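To make the role of a validation table concrete, here is a minimal sketch in Python. It is not UVU's actual implementation; the names `VALID_GENDER_VALUES` and `store_gender` are hypothetical, and the allowed values are taken from the two-value field described earlier. The point is only that the code itself is trivial, while the contents of the table are a social decision.

```python
# Hypothetical validation table: the only values the field will accept.
# Which values appear here is a policy choice, not a technical one.
VALID_GENDER_VALUES = {"Male", "Female"}


def store_gender(value, allowed=VALID_GENDER_VALUES):
    """Return the value if the validation table allows it, otherwise None.

    A rejected value is not flagged as an alternative reading of reality;
    it simply never enters the data system.
    """
    return value if value in allowed else None


# The same input under two different validation tables.
current_table = store_gender("Unspecified")
earlier_table = store_gender(
    "Unspecified", allowed=VALID_GENDER_VALUES | {"Unspecified"}
)
```

Under the current table the value is discarded, while under a table that still includes “Unspecified” it is stored. Nothing about the person changed between the two calls, only the table.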

For a field storing gender data to be limited to specific values, a series of data standards has to be created and implemented, based on what is of interest to those creating the data. These define some data values as valid (“Male” and “Female”) and all others as invalid. The Utah System of Higher Education standard deprecated “Unspecified” in 2012. It did so largely so that Utah institutions’ systems would produce data consistent with the national Integrated Postsecondary Education Data System (IPEDS), which required institutions’ reports of gender proportions to sum to 100%. But it also came at a time when transgender recognition was generating controversies within higher education and state politics in Utah. The technical change in the validation table wasn’t done for technical reasons; “Unspecified” was deprecated by a state bureaucracy in response to a mandate from a federal bureaucracy in an environment where such a decision could be seen as supporting the social priorities of key elected officials.

Data systems that reduce gender expression to the gender binary are examples of what I call a normalizing translation. These reduce myriad characteristics to a small set of characteristics that are considered normal; all potential values outside of the accepted ones are nonexistent informationally. There are two other ways that translation regimes select data states. Atomizing translations break down sets of related characteristics into separate, unrelated ones, for example, transforming intersectional identities like Jewyorican into distinct columns for religion, ethnicity, and place of residence. Unifying translations do the opposite, grouping distinct characteristics into a single value: “Asian and Pacific Islander.”
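The three kinds of translation can be sketched as small Python functions. This is an illustration under stated assumptions, not a description of any real system: the function names, the lookup tables, and the specific column values are all hypothetical, chosen only to mirror the examples in the paragraph above.

```python
# Values the (hypothetical) system treats as "normal".
NORMAL_GENDERS = {"Male", "Female"}

# Hypothetical lookup used to split one identity into separate columns.
ATOMIZE_LOOKUP = {
    "Jewyorican": {
        "religion": "Jewish",
        "ethnicity": "Puerto Rican",
        "residence": "New York",
    },
}

# Hypothetical grouping of distinct values under one label.
UNIFY_GROUPS = {
    "Korean": "Asian and Pacific Islander",
    "Samoan": "Asian and Pacific Islander",
}


def normalize(value):
    """Normalizing: keep accepted values; everything else becomes None."""
    return value if value in NORMAL_GENDERS else None


def atomize(identity):
    """Atomizing: replace one identity with separate, unrelated columns."""
    return dict(ATOMIZE_LOOKUP[identity])


def unify(value):
    """Unifying: collapse distinct values into a single group label."""
    return UNIFY_GROUPS.get(value, value)
```

In each case information is lost in a different way. `normalize("genderqueer")` returns `None`, `atomize("Jewyorican")` returns three columns that no longer record that they belonged together, and `unify("Korean")` and `unify("Samoan")` return the same label.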

Data scientists do not often think about our practices as political in nature. But all of the work required to represent some reality in a data system makes data inherently political. Especially as data-driven decisions become norms (even mandates), data scientists are creating the abstract world in which decisions take place before being implemented in the real world.

The immediate sense in which data translation is political is that the choices made in translating data allocate political power in the real world, not just in the data set. Those who create the translation regime determine which groups do and do not exist, what concepts are available to pursue claims on institutions, which needs can be legitimized and which can be dismissed. The ability to make one’s self, one’s group, and one’s interests legible to the state, organizations, or other individuals is increasingly determined by where one stands in the data.

Thus when a data system, like that at UVU, limits gender expression to cisgender identities, it eliminates transgender identities from the political community of the university: in the absence of transgender identity data, such students simply don’t exist analytically. Decision-makers might want to support such students’ interests, but where decisions are legitimated by data, they cannot do so without data about those students. Of course, the institutions may not have an interest in supporting some groups, in which case the ability to shape data becomes a key tool for pursuing the institutions’ interests.

But data translation also allocates political power among institutions and arms of the state. The ability to dictate data standards is, in part, the ability to dictate the substance of decisions. The national IPEDS data standard did not formally require the translation UVU uses, but it did require that UVU be able to produce it. The incentive is to design identical systems to reduce both technical demands and compliance workload. Similarly, the ability of state legislators to challenge bureaucratic decisions through oversight processes constrains the Utah System of Higher Education (USHE) as it develops system-wide data standards, in spite of technical flexibility. In either case, the ability to dictate data standards gives social institutions the ability to generate the data, and thus the decisions, they want.

The examples above show that information presents challenges to justice. The translation regime both makes data a power structure and acts as a power structure itself. There is no alternative to data translation; like any representational process, translation is inevitable in data. But that does not mean that all translation regimes are equal in their outcomes. It is equally possible for information systems to be designed to enhance justice, and data science can play a part in that. The key lesson of political data is that we should understand data as raising questions of justice, and work to build systems that promote social justice.

Information justice includes both procedural and substantive standards that result in information systems that further distributive and relational justice. It sees data systems as social, and thus calls on data scientists to ask social, not just technical, questions about their data. It calls for data systems to be more participatory and inclusive, which can be accomplished not just by including more people in data design but also by using algorithms that do not reduce everyone to the average case. It must balance the openness needed to maintain equal access to data with the privacy protections needed to ensure that individuals are not subject to the arbitrary power of social institutions. And it must be a social movement that articulates claims of injustice as much as a philosophical one that articulates principles to address them.

Jeffrey Alan Johnson is the Assistant Director of Institutional Effectiveness and Planning at Utah Valley University. He maintains research interests in higher education, information technology, and social theory, and teaches in the College of Humanities and Social Sciences at UVU. Johnson is the author of several articles on the politics of information technology and of Toward Information Justice: Principles, Policies, and Technologies, to be published later this year. He holds a Ph.D. in political science from the University of Wisconsin, and was previously Assistant Professor of Government and Philosophy at Cameron University.