Photo: Walter Baxter – licensed for reuse CC BY-SA 2.0

Brian Castellani (Kent State University)

When I attended university in 1984 as a psychology undergraduate in the States, the pathway to scientific literacy was pure and simple: you took a research methods course, followed by a statistics course or two, and that was it – you were prepared to do social science! Okay, if you were lucky, you could also take a qualitative methods or historical methods course, but my professors were pretty clear: the real science was quantitative methods and statistics.

Later, when I moved on to graduate school in clinical psychology and then medical sociology, little changed. Certainly, the statistics got more interesting and esoteric, which I very much liked. But the same old distinctions seemed dominant, with quantitative method and statistics holding the upper hand: hard science over soft science; quantitative method over qualitative method; math over metaphor; method over theory; representation over interpretation; experiment over description; prediction over understanding; variables over cases; on and on it went.

And why? Because, my quantitative professors argued, statistics (and pretty much it alone) made ‘sense’ of the complexity of social reality – what Warren Weaver, in his brilliant 1948 article, “Science and Complexity,” called the disorganized complexity problem. According to Weaver, nuances aside, the problems of science can be organized, historically speaking, into three main types. The first are simple systems, composed of a few variables and amenable to near-complete mathematical description – clocks, pendulums, basic machines. Second are disorganized complex systems, where the unpredictable microscopic behavior of a very large number of variables – gases, crowds, atoms, etc. – makes them highly resistant to simple formulas, requiring, instead, the tools of statistics. Finally are organized complex systems, where the interrelationships amongst a large number of variables, and the organic whole they create, determine their complexity – human bodies, immune systems, formal organizations, social institutions, networks, cities, global economies, etc.

Such systems, given their emergent, idiographic and qualitative complexity, are not understood well using simple formulas or statistics. Needed, instead, are entirely new methods, grounded in the forthcoming age of the computer, which Weaver, in 1948, saw on the horizon. Also necessary is a more open and democratic science, grounded in interdisciplinary teamwork and exchange – both critical to understanding and managing organized complexity. Weaver puts it this way: “These new problems, and the future of the world depends on many of them, requires science to make a third great advance, an advance that must be even greater than the nineteenth-century conquest of problems of simplicity or the twentieth-century victory over problems of disorganized complexity. Science must, over the next 50 years, learn to deal with these problems of organized complexity” (1948, p. 540).

That was 1948. It is now 2014. So, what happened? Did Weaver’s prognosis about the future of science come true? Well, it all depends upon which area of science you are looking at. In the social sciences, the answer is definitely “No!” For example, a quick review of the methods courses taught to undergraduates in the social sciences makes it rather clear that, over the last seven decades, little has changed. From Oxford and Harvard to Stanford and Cambridge to, in fact, just about every university and college, the pathway to scientific literacy in the social sciences remains pretty much the same: conventional quantitative methods and statistics, grounded in mechanistic and reductionist experimental or quasi-experimental design.

Now, don’t get me wrong, there are exceptions, such as the School of Political and Social Science, University of Melbourne, or the BSc in Sociology, London School of Economics and Political Science, or the Institut d’études politiques de Paris (known as Sciences Po), where undergraduates are given courses in critical thinking, applied research and interdisciplinary and mixed methods. And, in the UK, for example, there is a strong qualitative methods tradition. Still, even at the University of Michigan in the States, which boasts one of the most advanced methodological centers in the world – Center for the Study of Complex Systems – the undergraduate social science curriculum is pretty standard fare, and with heavy emphasis on the old distinctions and statistics; or, in the case of economics, linear mathematical modeling.

Still, I need to be clear. I am not saying that – pace Weaver – conventional statistics are benign or useless or wrong-headed! I am, after all, a quantitative methodologist and, when used correctly, conventional statistics are very powerful! I am also not saying that all the old distinctions are misguided. What I am saying, though, is that if you – like me – received a social science education in conventional statistics alone, your professors failed you. And, they failed you because they should have taught you five additional things.

Is Social Reality really that Disorganized?

First, they should have taught you that, of the three types of problems presented to science, conventional statistics chose to view social reality as primarily disorganized.

In a nutshell, and without nuance, the history of statistics in the social sciences is one of great achievement but also error; and the basis for both is the belief that disorganized complexity constitutes the major challenge to social scientific inquiry. For conventional statisticians, the problem with social reality is that, at the microscopic level, its variables (like those of the natural sciences) are too numerous, dynamic, nonlinear and complex to be quantitatively modeled. Think, for example, of trying to predict the individual voting behavior of all 1.24 billion people living in India. Your model’s accuracy at the microscopic level would be, to put it mildly, very low indeed. If, however, you turned to the tools of statistics, with its laws of central tendency and its theories of probability, you might have a chance. Why? Because now you would be focusing on calculating macroscopic, aggregate, average behavior.
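To make the contrast concrete, here is a minimal sketch of the micro-versus-macro point. Everything in it is an assumption chosen for illustration: a toy electorate of 200,000 voters, a 55% underlying chance of supporting a hypothetical Party A, and (crucially) voters who decide independently of one another, which is exactly the disorganized complexity assumption. The function names are made up for this example.

```python
import math
import random


def simulate_votes(n_voters, p_yes, seed=0):
    """Toy electorate: each voter independently backs Party A with probability p_yes."""
    rng = random.Random(seed)
    return [1 if rng.random() < p_yes else 0 for _ in range(n_voters)]


def individual_accuracy(votes):
    """Accuracy of the best constant guess: predict the majority choice for everyone."""
    share = sum(votes) / len(votes)
    return max(share, 1 - share)


def poll_estimate(votes, sample_size, seed=1):
    """Estimate the aggregate vote share from a simple random sample."""
    rng = random.Random(seed)
    sample = rng.sample(votes, sample_size)
    share = sum(sample) / sample_size
    std_error = math.sqrt(share * (1 - share) / sample_size)
    return share, std_error


# Assumed, illustrative numbers (not real electoral data).
votes = simulate_votes(n_voters=200_000, p_yes=0.55)
true_share = sum(votes) / len(votes)
accuracy = individual_accuracy(votes)
estimate, std_error = poll_estimate(votes, sample_size=10_000)

print(f"Share of individual votes guessed correctly: {accuracy:.3f}")
print(f"True aggregate share for Party A:            {true_share:.3f}")
print(f"Poll estimate of the aggregate share:        {estimate:.3f} (s.e. {std_error:.3f})")
```

Under these assumptions, guessing any one person's vote is barely better than a coin toss, while a modest sample pins down the aggregate share quite tightly. The sketch says nothing about what happens once voters influence one another, which is where the independence assumption stops holding.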

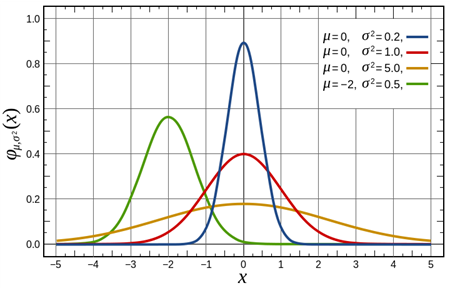

Remember the bell curve? Based on your chosen degree of accuracy, you would seek to

Figure 1: Bell (Gaussian) Shaped Curve (Wikipedia)

predict, in probabilistic terms, how most people in India are likely to vote – the majority area of the distribution. And, being a good quantitative social scientist, you also would develop as simplistic a causal model of voting as possible, what Capra and Luisi (2014) call mechanistic or reductionist social science. Treating voting as your dependent variable, you would build a linear model of influence, hoping to determine which independent variable(s) – e.g., political views, gender, religious affiliation, age, geographical location, etc – best explain most voting behavior.
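For readers who have never seen one, the kind of variable-based linear model described above can be sketched in a few lines. This is only a toy, not anyone's actual voting model: the data are simulated, the single independent variable (age) and the rule linking it to voting are invented for the example, and `fit_line` is a hand-rolled ordinary least squares helper rather than a library routine.

```python
import random


def fit_line(x, y):
    """Ordinary least squares for one predictor: returns (intercept, slope)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


rng = random.Random(42)

# Hypothetical independent variable: voter age, between 18 and 80.
ages = [rng.uniform(18, 80) for _ in range(5000)]

# Invented data-generating rule: the chance of voting for Party A
# rises by half a percentage point per year of age.
voted_a = [1 if rng.random() < 0.2 + 0.005 * age else 0 for age in ages]

# Dependent variable (vote) regressed on the independent variable (age).
intercept, slope = fit_line(ages, voted_a)

print(f"Estimated intercept: {intercept:.3f}")
print(f"Estimated change in P(vote for A) per year of age: {slope:.4f}")
```

The model recovers the average, linear effect of age that was built into the simulation. It has no way to represent voters who talk to each other, form networks, or change the system they are part of, which is the limitation the rest of this essay turns on.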

And that’s it! That is the supposed brilliance (in two hundred words or less) of what Byrne (2013) critically calls the conventional quantitative program in the social sciences: (1) social reality is a form of disorganized complexity, which is best handled using the tools of statistics; (2) in terms of studying disorganized complexity, the goal is to explain majority, aggregate behavior in terms of probability theory and the macroscopic laws of averages; (3) to do so, one seeks to develop simple, variable-based linear models, in which variables are treated as ‘rigorously real’ measures of social reality; (4) model-in-hand, the goal is to identify, measure, describe and (hopefully) control or manage how certain independent variables impact one or more dependent variables of concern; (5) and, if done right, these models lead to reasonably linear explanations of why things happen the way they do; (6) which, in turn, leads to relatively straightforward policy recommendations for what to do about them.

All of the old distinctions are upheld and social reality is represented! What else is there? Well, actually, a lot – which takes us to our next point.

Is Social Reality really that Disorganized?

Second, they should have taught you that, along with Weaver (1948), there exists a very long list of scholars who do not think that the complexity of social reality is best viewed as disorganized and therefore only probabilistic. In fact, the list is so long that to mention even a few is to invite critique for not listing others. Suffice it to say that studying this list would consume (at minimum) a year’s worth of courses in your college philosophy department, where you would discuss such topics as the philosophy and sociology of science, post-positivism and pragmatism, feminism and feminist methodology, anti-positivism, critical realism and neo-pragmatism, eco-feminism and systems theory, social constructionism and social constructivism, 2nd order cybernetics and post-structuralism, qualitative method and historiography, ethnography and deconstructionism, actor-network theory and postmodernism. And, that is not it. Exploring this list, you would also learn that, despite conflicting differences, the general view held is that social reality is best viewed, in one form or another, as organized complexity.

Still, despite the overwhelming power of this list, it remains the case that quantitative methods education in the social sciences, with its emphasis on disorganized complexity and its continuation of the old distinctions, particularly in the States, stands strong and assured, and with little sign of changing. In fact, the most common curricular response to the failures of statistics seems to be a push for even more statistics – as, for example, in the UK’s new Q-Step movement, which, like the States, seeks to address the quantitative deficit in the social sciences by teaching undergraduates and graduates more of the conventional quantitative programme in the social sciences!

Again, do not get me wrong. There are times when viewing the complexity of social reality as disorganized is useful. The problem, however, is that, increasingly, the social problems we currently face suggest otherwise – which takes us to our next point.

Is Social Reality really that Complex?

Third, they should have taught you that, as Weaver predicted in 1948, the organized complexity of the world got a whole heck of a lot more complex. And, it is not just social reality that got a lot more complex, but also the data we use to make sense of it! Scholars use such terms as post-industrialization, network society, and globalization to describe the former organized complexity; and cyberinfrastructure, data science, and big data to describe the latter! Whatever the term, the scholars involved in the critical study of globalization or big data make the same point: from ecological collapse and data warehouses filled with terabytes of information to global warming and an endless streaming of digital data to the instability of global financial markets and the threat of pandemics, we contend, now, with a data-saturated world of social problems and organized complexity far beyond the pale of conventional quantitative social science (see Capra and Luisi 2014).

Can Organized Complexity Be Modeled?

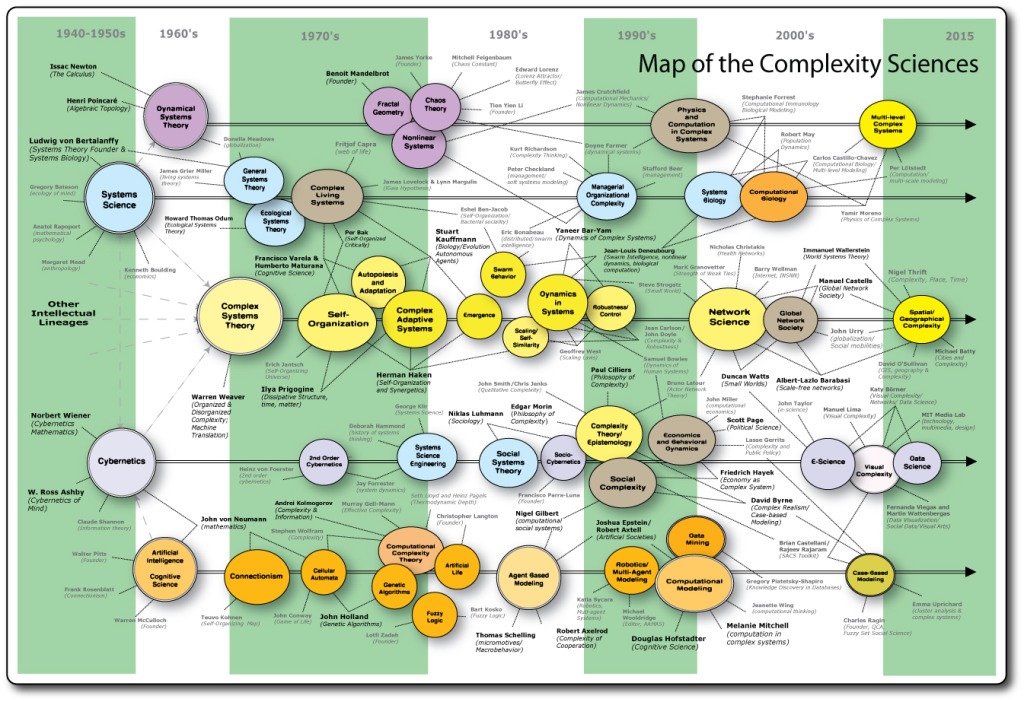

Fourth, they should have taught you that, over the last three decades, a revolution in methods, as Weaver also predicted, has taken place – thanks, in large measure, to the computer, computational algorithms, cyberinfrastructure and big data. The result, as my Map of the Complexity Sciences shows, is a revolution in computational methods.

And, what is more, this revolution in method contains some of the most highly innovative tools and techniques ever created, from geospatial modeling and complex network analysis to dynamical systems theory and nonlinear statistical mechanics to multi-agent modeling and artificial neural nets to cellular automata and data mining to data visualization and case-based modeling. And here is the kicker! As already suggested, these tools were created to more effectively manage the increased organized complexity of our social world and the data gathered to make sense of it, as well as to model other dimensions of complexity that, traditionally, exist outside the domain of conventional social statistics – as in the case of complex networks, emergent behavior, qualitative causality, agency and nonlinear dynamics. Many of these new methods also do not ‘hold true’ to the old dualisms – hard science, for example, is seen as less distinct from soft; math is seen more as metaphor; qualitative methods become quantitative, and so forth (see Castellani and Rajaram 2012; Rajaram and Castellani 2013, 2014).

Can Organized Complexity Be Conceptualized As a Complex System?

Finally, they should have taught you that Weaver’s third great advance in science did, in fact, take place; which scholars generally refer to as the advance of the complexity sciences or, alternatively, complexity theory or complex systems theory (Byrne 2013; Byrne and Callaghan 2013; Capra and Luisi 2014; Castellani and Hafferty 2009; Mitchell 2009).

As Weaver also predicted, this ‘advance’ was premised largely on the study of organized complexity. Despite differences in the computational methods used – which I discussed above – the common view amongst complexity scholars is that social reality and the data used to examine it are best understood, methodologically speaking, in organized complex systems terms. In other words, social reality and data are best seen as self-organizing, emergent, nonlinear, evolving, dynamic, network-based, interdependent, qualitative and non-reductive. And that is not it. They also agree that, while complex social systems are real, no one method (especially statistics) can effectively identify, model, capture, control, manage or explain them. Instead, a multiplicity of mixed methods and perspectives is needed, along with an increased focus on critical and reflexive thinking, grounded in interdisciplinary, application-oriented teams. Does that sound familiar? It should, as it is what Weaver recommended back in 1948.

So, Why Didn’t You Learn these Things in Stats Class?

So – you are probably wondering – why were you not taught these things? There are a handful of interconnected reasons, which a number of scholars have identified (See, for example, Byrne and Callaghan 2014; the Gulbenkian Commission 1996; Savage and Burrows 2007, 2009; Reed and Harvey 1996; Uprichard 2013).

Some are disciplinary and others are professional. Here is a quick list: (1) most social scientists don’t receive training in computational modeling or complexity science; (2) to do so, they would have to cross the campus to the mathematics, physics and computer science departments; (3) doing so would mean learning how to work in mixed-methods and interdisciplinary teams, talking across intellectual boundaries and being comfortable in what one doesn’t know, overcoming the humanities-versus-math divide, etc; (4) in turn, social science departments would need to hire and promote scholars working at the intersection of complexity science, computational modeling and social science; (5) as well as loosen their entrenched and outdated frameworks of research excellence; (6) and, in turn, a similar advance would be required of social science journals, which remain largely ignorant, unsympathetic and even antagonistic to this work; (7) in turn, these advances would need to result in major curricular revision.

In terms of curriculum, it is not so much that the social sciences would need to be proficient in calculus, computational analysis, and nonlinear statistical mechanics! Hardly. Instead, an open learning environment would need to be created, where students could be introduced to new and innovative notions of complexity, critical thinking, data visualization and modeling, as well as the challenges of mixed-methods, interdisciplinary teamwork, global complexity, and big data! In short, the social sciences would need to be ‘opened-up,’ as Weaver called for in 1948 – which takes us to the crux of the problem!

Opening Up the Social Sciences?

While Weaver’s call to “open-up” the social sciences was incredibly prescient, it was neither the first nor the last. In fact – as shown on my above map – many of the scholars involved in the complexity sciences have echoed similar opinions, including many new scholars. Not all of these ‘calls,’ however, are on equal footing. A recent and widely publicized example – well intended but entirely wrongheaded – is Christakis’s New York Times opinion piece, Let’s Shake Up the Social Sciences. While Christakis is a renowned network scientist, physician and sociologist, his call for opening the social sciences is misplaced, as he seeks to ground social inquiry in a reductionist, microscopic, lab-like, methods-driven social science, which is little more than a complexity science version of the conventional quantitative program in the social sciences.

Still, of the various calls echoed, perhaps the most famous is the Gulbenkian Commission on the future of the social sciences, chaired by Immanuel Wallerstein. Appointed to review the “present state of the social sciences” in relation to contemporary knowledge and need, the Commission began its report with a historical review, focusing (pointedly enough) on the same fifty-year time period as Weaver, circa 1945 to 1995. And, what conclusions did they reach? Pretty much the same as Weaver: the social sciences need to (1) re-organize themselves according to a post-disciplinary structure; (2) situate themselves in a complexity science framework; (3) embrace a mixed-methods toolkit, based on the latest advances in computational and complexity science method; and (4) work in interdisciplinary teams.

And what was the response? You guessed it. Nothing changed. Well, that is not entirely true. Changes have been taking place, but just not within mainstream social science. While conventional social science sits idly by – largely ignorant or indifferent – scholars from across the sciences are ‘opening up’ major domains of social scientific inquiry. The question, however, is in what ways and to what effect and at what cost? As Byrne and Callaghan catalogue in their recent book, Complexity Theory and the Social Sciences: The State of the Art (2014), there is a lot of work being done in the computational and complexity sciences that, unfortunately and despite the best of intentions, lacks a proper knowledge of social science and, as a result, has “gone off the deep end,” as they say!

One quick example is the whole big data push, which dangerously veers, in many instances, into a-theoretical modeling, where issues of agency and social structure are lost (Burrows and Savage 2014; Uprichard 2013). Another is the whole social physics push, which is nothing more than old-school, hard-science-is-best reductionism, where the fundamental laws of social reality are sought through high-speed computers. Little do these researchers realize that the call for a social physics goes all the way back to the 1800s. And what happened to that old-school 1800s call? It grew up and became what we now call sociology (see Castellani 2014).

As these examples suggest, and as Duncan Watts, the famous network physicist and complexity scientist, has pointed out in an article for the Annual Review of Sociology (2004), while the overwhelming majority of physicists, mathematicians and computational scientists are incredible technicians and methodologists, most are not very good social scientists. In turn, however – as I have hopefully made clear in this essay – by today’s standards, the overwhelming majority of social scientists are not very good technicians or methodologists. Both sides are at fault for not extending their reach, and both are foolish for not doing so – with all sorts of negative and unintended consequences for how we deal with the global social problems we currently face! Still, lots can be done to overcome this problem, from rethinking undergraduate methods education to reorganizing the social sciences. The problem, however, is that, judging from the state of things, it is highly unlikely anything will be done. Methods education in the social sciences will continue to fail its students.

Now, don’t get mad at the messenger just because you don’t like the message. After all, it’s been around since, at least, 1948.

References

Burrows, R. & Savage, M. (2014). After the crisis? Big Data and the methodological challenges of empirical sociology. Big Data & Society, 1, 1-6.

Byrne, D. (2012). UK Sociology and Quantitative Methods: Are We as Weak as They Think? Or Are They Barking up the Wrong Tree?. Sociology, 46(1), 13-24.

Byrne, D. & Callaghan, G. (2014). Complexity Theory and the Social Sciences: The State of the Art. UK: Routledge.

Capra, F., & Luisi, P. L. (2014). The Systems View of Life: A Unifying Vision. Cambridge University Press.

Castellani, B. “The Limits of Social Engineering: A Complexity Science Critique.” Sociology and Complexity Science Blog, (posted on 4/28/14)

Castellani, B. & Hafferty, F. (2009). Sociology and Complexity Science: A New Area of Inquiry. Germany: Springer.

Castellani, B., & Rajaram, R. (2012). Case-based modeling and the SACS Toolkit: a mathematical outline. Computational and Mathematical Organization Theory, 18(2), 153-174.

Rajaram, R., & Castellani, B. (2012). Modeling complex systems macroscopically: Case/agent‐based modeling, synergetics, and the continuity equation. Complexity, 18(2), 8-17.

Rajaram, R., & Castellani, B. (2014). The utility of nonequilibrium statistical mechanics, specifically transport theory, for modeling cohort data. Complexity.

Savage M and Burrows R (2007) The coming crisis of empirical sociology. Sociology 41(5): 885–899.

Savage M and Burrows R (2009) Some further reflections on the coming crisis of empirical sociology. Sociology 43(4):765–775.

Uprichard, E. (2013). Big Data, Little Questions? Discover Society, 1 October 2013

Watts, D. (2004) The “New” Science of Networks. Annual Review of Sociology 30, 243-270.

Weaver, W (1948). “Science and complexity,” American Scientist, 36: 536-544

Brian Castellani is a professor of sociology at Kent State University, where he also runs the Complexity in Health Group, as well as an adjunct professor of psychiatry at Northeast Ohio Medical University. He is internationally recognized for his work in computational and complexity science methods and their application to various topics in health and health care.